Logic

How To Be Much Cleverer Than All Your Friends (so they really hate you)

Part I: Design for a Superbeing. By Mike Alder.

Long, long ago, before Philosophy Now was even a gleam in its editor’s eye, there were bright and lively-minded people around, just like you. People who liked new ideas, liked a bit of intellectual stimulation, enjoyed debate and discussion, people who liked to use their brains. And what did they do when there was no Philosophy Now? Well, some of them used to read Science Fiction.

In these latter, degenerate days when Science Fiction mainly means movies, and furthermore movies which are of the leave-your-brain-where-you-buy-your-pop-corn-and-pick-it-up-on-the-way-out category, this may seem unlikely; but all us old wrinklies who were around before mobile phones know it’s true. It used to come in things called books, and they were genuine science fiction, not just tales of dragons and elves, and bold warriors with IQs lower than that of their swords. The stories, it has to be admitted, were every bit as silly as Star Wars, and the characterisation even worse. But in the good old days now gone, there were ideas that knocked your socks off. For an example, one I’ve mentioned before when discussing the sins of Miss Blackmore, take A.E. van Vogt’s World of Null-A. Two ideas come up in the book: one is that the hero has two brains and the second is that he can think in a non-Aristotelian logic. The combination makes the hero ever so clever, able to out-think lesser mortals stuck with ordinary logic, and to defeat the bad guys with relative ease. Only relative ease, or the book would be rather short, but he defeats an evil galactic overlord and gets the girl, and no hero can reasonably expect to do better than that. All done, moreover, by superior thought rather than bulgier muscles, bigger zap-guns, or some kind of moral superiority associated with being sentimental as in the case of Captain Kirk. To the small adolescent lad I used to be, not conspicuous for either bulgy muscles, sentiment or moral superiority, this had definite appeal.

Some ideas turn out, after close and critical thought, to be pure tosh. So much so that whole organisations are devoted to stamping out close and critical thought or at least pointing it somewhere else. The principal idea in the Star Wars movies is that the Universe allows what used to be known as magic, which is mastered by listening to Master Yoda passing on his profound insights. These sound the sort of thing that come in fortune cookies or Christmas crackers, and one would have to regretfully conclude that the central idea in Star Wars is complete tosh. What is rather striking about both the ideas in World of Null-A is that they aren’t. In fact both are applicable to you. You do have two brains. And you can, in principle, learn to think in a non-Aristotelian logic. And if you master the skill, it really does make you more intelligent in an objective sense, namely, better able to predict the world, than those who can’t. So read on and I shall explain how to think in a null-A logic, and explain what needs to be done in order to get good at it and develop a superior intellect. You probably won’t be able to teleport as van Vogt’s hero could; that part we have to admit was pure fiction. But there is a language based on a non-Aristotelian Logic. And you can learn to think in it.

I have to admit now, to avoid disappointment later, that there is a down side to this. For starters, no evil galactic overlords will be provided for thwarting purposes, nor any girls for any purposes whatever. Giving you a superior mind will certainly make it hard for you to put up with the lesser abilities of your friends, and will also make it even harder for them to put up with you. But it has to be confessed that these are not the main drawbacks. After all, if I offered to make you ten centimetres taller I could do it: there’s a device called a rack which will accomplish it quite quickly. The trouble is, it’s rather painful. And given the choice of being stretched on the rack or learning to think in a non-Aristotelian logic, level-headed people might well consider the rack the softer option. So be prepared for a tough time in the remainder of this article, and an even tougher time if you decide to go the whole hog and become a super-being.

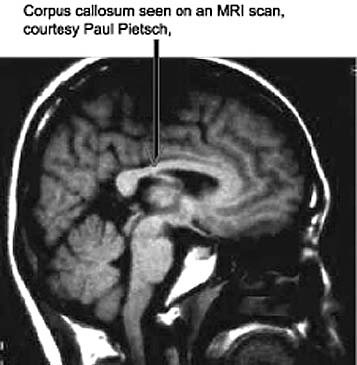

Touching on the two brains aspect first; Roger Sperry got half a Nobel Prize (maybe it should have been two) for showing that people who had the corpus callosum severed showed signs of distinct personalities, one in each hemisphere of the brain. The corpus callosum is a big nerve trunk which connects the two hemispheres, and cutting it was done in cases of extreme epilepsy in order to prevent the brain from going wholly into the kind of spasm that manifests itself as an epileptic attack. Presenting pictures of half-naked ladies to one hemisphere of a male subject got pursed lips and a disapproving frown, the other hemisphere of the same subject giggled. This doesn’t happen with most people, presumably because one hemisphere or the other wins some sort of internal debate about the best response, the argument being conducted via the corpus callosum. Unless you have had some currently unfashionable surgery, you are probably well equipped in this regard, which is just as well, or you would be like the two headed pushmi-pullyu, and would be in serious trouble if you had a fundamental disagreement of values.

Below is a picture of a brain showing the corpus callosum. (You can also see it at the website.)

Disagreement between the two hemispheres may manifest itself as adolescent angst or chronic indecisiveness, who knows? But that you have two hemispheres, and that each is capable of some sort of intellectual activity, is not in serious doubt. The reality is much richer than the simple tales told to the credulous by the optimistic, and worthy of some investigation, but for the present it suffices to make the claim that you indeed have two brains. You are not alone in this. We all do.

And yes, you will need both of them if you decide to master thinking in a non-Aristotelian logic.

For those who came in late, or even for those familiar with the sins of Miss Blackmore but with less than perfect memories, first some words of wisdom about the ordinary, common or garden, Aristotelian logic.

Classical Logic, first codified by Aristotle (hence van Vogt’s habit of attaching his name to it), is all about the rules for correct argument. Two things should be noticed: first, everyone over the age of ten has some grasp of logic. We work it out by inferring the rules of what words mean, in particular how ‘all’ and ‘and’ and ‘not’ and ‘or’ are used. There is a remarkable ability of human brains to extract rules from data, and we do it a great deal in childhood, somewhat less frequently in later life. As a result of listening to logical arguments, those who did listen have some idea of how to follow and produce a logical argument themselves, having extracted the rules from samples. It has to be faced that some of us are better at it than others. The second thing to notice is that having extracted rules, these rules can be written down and listed. A rat could learn that when the bell rings it is safe to reach for the food and when the buzzer sounds it is a good idea to get off the metal plate before you get zapped. It has extracted a rule from data. But it takes a human being to write the rule down and pass it on to its young. And some of the human beings who have worked out what the rules of valid argument are, have obligingly written them down for us. This allows even those of us who aren’t smart enough to work out all of them to have access to the complete list.

Aristotle classified the valid syllogisms; the first (called AAA) is:

All A’s are B’s,

All B’s are C’s

Therefore all A’s are C’s.

The second (AII) is

Some A’s are B’s,

All B’s are C’s

Therefore some A’s are C’s.

There are lots more of them.

You should have no difficulty checking that these are legitimate arguments whenever you replace the capital letters by anything you want: thus if all human beings are mammals and all mammals are animals, then it must be true that all human beings are animals, whether you like this conclusion or not. On the other hand, the equivalent-looking

Some A’s are B’s,

Some B’s are C’s

Therefore some A’s are C’s

is not a valid syllogism. There are some choices of A, B and C which make it fail.
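For anyone who would rather let a machine hunt for the failing choices, here is a minimal Python sketch. It assumes that ‘All A’s are B’s’ can be read as ‘A is a subset of B’ and ‘Some A’s are B’s’ as ‘A and B overlap’; the function names and the three-element universe are illustrative choices, not part of Aristotle’s list. A search like this can only turn up counterexamples; finding none over a small universe is encouraging but is not by itself a proof of validity.

```python
from itertools import combinations, product

UNIVERSE = (0, 1, 2)  # illustrative three-element universe (an assumption)


def all_subsets(items):
    """Return every subset of items as a frozenset."""
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]


def all_are(x, y):
    """'All X's are Y's', read as: X is a subset of Y."""
    return x <= y


def some_are(x, y):
    """'Some X's are Y's', read as: X and Y share at least one member."""
    return bool(x & y)


def find_counterexample(premise1, premise2, conclusion):
    """Look for sets A, B, C with both premises true and the conclusion false."""
    subsets = all_subsets(UNIVERSE)
    for a, b, c in product(subsets, repeat=3):
        if premise1(a, b) and premise2(b, c) and not conclusion(a, c):
            return a, b, c
    return None


# All A's are B's, all B's are C's, therefore all A's are C's.
first = find_counterexample(all_are, all_are, all_are)
# Some A's are B's, all B's are C's, therefore some A's are C's.
second = find_counterexample(some_are, all_are, some_are)
# Some A's are B's, some B's are C's, therefore some A's are C's.
third = find_counterexample(some_are, some_are, some_are)

print("first form: ", first)
print("second form:", second)
print("third form: ", third)
```

The first two searches come back empty-handed, while the third finds sets that break the argument. In everyday terms: some cats are animals, some animals are dogs, but it does not follow that some cats are dogs.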

And good old Aristotle provided a comprehensive list of all the valid syllogisms, and for over a thousand years, schoolchildren were forced to learn Latin rhymes in order to memorise them.

The psychologist Piaget concluded that the final stage of intellectual development of the child is this ability to extract the rules of logic. By this he seems to mean that in their later teens, most people can extract the rules from hearing other people use them without being able to say what the rules are. They know them in the sense of being able to follow them, but not in the sense of being able to state them. Obviously the second level of knowing the rules, being able to say what they actually are, is more difficult if you have to work them out for yourself, but they should be easy to recognise when someone has already done the hard part of writing them down in a rule book. Piaget doesn’t say at what age the child figures out how to write down the rules of Logic, but it must be on the high side since most people never get there.

Why should we care about the rule book? Well, if arguments get complicated, you can either fall back on guessing what the right rules are, or you can use the rule book if you happen to know it. So for many centuries, part of every Western-educated person’s background consisted of learning the rules as laid down by Aristotle. It was supposed to make them better at producing and following arguments.

As with most educational theories, nobody bothered to find out if this actually worked. After all, the only people qualified to judge had already learnt the laws of logic, and being educated meant being like them.

Don’t laugh, your own education has been based on even sillier ideas.

Other parts of classical logic also centred on when one could be sure of the truth of propositions if one was sure of the truth of other propositions. For any proposition, A, there was a negation, written ~A, which was the denial of A. Then one can write out some of the laws of logic in a very algebraic looking way:

(1) A + ~A = 1

(2) A . ~A = 0

where + is short for ‘or’ and . is short for ‘and’. George Boole did this in the nineteenth century. Maths strikes again.

The first law above (the ‘law of the excluded middle’) says that any proposition is either true or false. The second law says that to assert something and its negation is to say something false. We use 1 for truth and 0 for falsity, so a proposition is defined to have a value, either true or false, 1 or 0. Logic, please note, is not generally concerned with telling us which: that’s a matter of experience. Logic is concerned not with the truth, but with whether arguments are sound, although admittedly this came to be formulated in some propositions being true for logical reasons, for example: ‘A or not A’. This is sometimes said to be a necessary truth, or a tautology. Tautologies aren’t statements about the world, they only look a bit like them. Their truth is not a matter of the world being some way when it might have been another. They are true in a thoroughly boring way: they are statements about how language is correctly used. They are the rules that have been extracted from how language was in fact used. Later they became the rules of how you’d better use language if you didn’t want to be laughed at as too dumb to figure out the rules from the samples.
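Laws (1) and (2) can be checked by brute force, since A has only two possible values. The Python sketch below assumes one common arithmetic encoding, which is my choice rather than anything taken from Boole’s own notation: ‘or’ as the larger of two values, ‘and’ as the smaller, and ~A as 1 minus A.

```python
def check_boolean_laws():
    """Evaluate laws (1) and (2) for both possible truth values of A."""
    results = []
    for a in (0, 1):
        not_a = 1 - a                # ~A
        a_or_not_a = max(a, not_a)   # A + ~A, with + read as 'or'
        a_and_not_a = min(a, not_a)  # A . ~A, with . read as 'and'
        results.append((a, a_or_not_a, a_and_not_a))
    return results


for a, law1, law2 in check_boolean_laws():
    print(f"A={a}: A + ~A = {law1}, A . ~A = {law2}")
```

Whichever value A takes, the first expression comes out as 1 and the second as 0, which is all that ‘true for logical reasons’ amounts to here.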

All this worked for a long time, although the algebraic notation came more recently; all it did was say in shorter symbol strings what Aristotle knew two and a half thousand years ago.

Time for something new. It was the philosopher Leibniz who is credited (by John Maynard Keynes, the economist) with the observation that people also pick up a different and more powerful form of reasoning. If you see a fire engine going flat out and flashing its lights and making a lot of noise, and see a column of smoke rising in the distance, you can’t be certain that something is on fire, but it seems likely. And if A is very likely to be true, and whenever A is true then B is almost always true too, then you are inclined to think B is likely to be true. You cannot be sure, and Aristotelian logic is no help, but people make a living betting on less likely things than that. And we all reason this way, we follow some set of rules, what one might call rules of plausible reasoning, but most of us don’t know what the rules are. So it’s a bit like logic before Aristotle; most of us are smart enough to have worked out rules of plausible reasoning from seeing other people do it, but are not quite smart enough to articulate those rules. We can follow them but not state them. So when we see the fire engine and the column of smoke, or the man wearing a Mickey Mouse mask climbing through the jeweller’s window with a bag over his shoulder, we frame some plausible hypotheses rather quickly, despite the claim of fire or burglarious intent not being warranted by strict logic. And again, just as with Aristotelian logic, there is a case for being taught what the rules actually are by someone who has articulated them, because knowing the rules, or at least where to find the rule book, helps when the situation is complicated. People who can do this can reason better and are less likely to make blunders in their thinking. In other words, they are, in operational terms, smarter.

Leibniz wrote enthusiastically about the prospects for an extension of classical logic to the case of plausible reasoning, but never got down to the nitty-gritty of saying what the rules actually were. Many philosophers, while excellent at seeing the big picture, are, alas, regrettably fluffy about the fine detail. And some things depend rather a lot on the fine detail.

Jump forward about two and a half centuries to the early twentieth century, when logicians were wondering about generalising logic, just for fun. An obvious possibility is to have more than two possible values for the truth value. Instead of just False and True, how about a third option? It is perfectly possible to have a new possibility and build a system of rules for working in this new three-valued logic. The question is, what would it mean? The answer is it could mean anything you wanted it to, so the next question is, what meaning could you give to it that would make it useful? When an innocent student, I once found a moderately useful meaning for a five-valued logic in electronic circuitry, but it seemed a bit restricted and not as exciting as one might like. Can one find a generalisation of logic, where you have more than two values, that would be really applicable and useful?
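To see what a system of rules for a third value might look like, here is a small Python sketch of one well-known choice, the connectives of Kleene’s strong three-valued logic. Both the reading of the third value as ‘unknown’ and the choice of this particular system are assumptions made for illustration; they are not necessarily the rules any particular early logician had in mind, and the names below are mine.

```python
from fractions import Fraction

# Three truth values; reading 1/2 as 'unknown' is an illustrative assumption.
FALSE, UNKNOWN, TRUE = Fraction(0), Fraction(1, 2), Fraction(1)
VALUES = (FALSE, UNKNOWN, TRUE)


def neg(a):
    """Negation: swaps true and false, leaves 'unknown' where it is."""
    return 1 - a


def disj(a, b):
    """'or': the larger of the two values."""
    return max(a, b)


def conj(a, b):
    """'and': the smaller of the two values."""
    return min(a, b)


# Re-evaluate the two classical laws over all three values.
excluded_middle = {a: disj(a, neg(a)) for a in VALUES}
non_contradiction = {a: conj(a, neg(a)) for a in VALUES}

for a in VALUES:
    print(f"A={a}: A or not A = {excluded_middle[a]}, "
          f"A and not A = {non_contradiction[a]}")
```

With the old values 0 and 1 nothing changes, but when A is ‘unknown’ both ‘A or not A’ and ‘A and not A’ come out ‘unknown’ as well, so neither classical law holds across the board. Whether that is useful depends entirely on what the third value is taken to mean.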

John Maynard Keynes, in 1921, argued that you could. He reasoned that you could have a continuous logic, where you had not only the numbers 0 and 1, but all the other numbers in between as well. And if you had a proposition B, you could assign a number between 0 and 1 to B to represent the extent to which you believed B, the credibility of B. A credibility value of 1 meant that you thought B was true, 0 meant you thought it was false, and the other numbers in between were for representing different degrees of belief. This, he thought, was what we were doing when we assigned probabilities to things. Mostly, people had assigned probabilities to events up to this point. Keynes thought we should assign probabilities to propositions. If you take a coin and toss it and no funny business is going on, then Keynes said that the statement ‘the coin will land with head up’ had probability one half. The probability isn’t a property of the coin, it’s a property of a statement about what the coin will do, made by someone with a certain amount of information. Change the relevant information that the person has, and you change the probability. If a close investigation of the coin showed a head on both sides, it would change a lot.
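The point that the number belongs to the statement-plus-information, not to the coin, can be put in a few lines of Python. This is only a sketch: it assumes that someone who knows what is on each face, and nothing else, treats the two faces as equally likely to finish on top, and the function name is a hypothetical one chosen for the example.

```python
from fractions import Fraction


def probability_of_head(known_faces):
    """Number assigned to 'the coin will land with head up' by someone who
    knows only what is on each face and has no reason to favour either face
    (an illustrative assumption)."""
    return Fraction(known_faces.count("H"), len(known_faces))


before_inspection = probability_of_head(["H", "T"])  # assumed an ordinary coin
after_inspection = probability_of_head(["H", "H"])   # found a head on both sides

print(before_inspection, after_inspection)  # prints: 1/2 1
```

Same coin, same toss; the only thing that changed between the two numbers is what the person making the statement knows.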

Many people heartily disliked this approach, arguing that it made everything subjective. They wanted to believe that the probability was something the coin had, and moreover that you could measure it by tossing it lots of times and counting the outcomes. They said that the probability was one half when the ratio in the limit, as you did more and more tosses, of the number of heads to the total number of throws was one half. Since you cannot in fact toss a coin an infinite number of times, it follows that you cannot ever actually know the probability exactly, but you can get closer and closer estimates. This is what is known as the frequentist interpretation of probability.
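The frequentist recipe is easy to try out on a simulated coin. The Python sketch below uses a pseudo-random generator with the value one half built in by assumption, so it illustrates the definition rather than supporting it; the seed and the number of tosses are arbitrary choices.

```python
import random


def running_head_fraction(num_tosses, seed=0):
    """Simulate tosses of a fair coin and return the fraction of heads
    observed after each toss."""
    rng = random.Random(seed)  # fixed seed so that a run can be repeated
    heads = 0
    fractions = []
    for n in range(1, num_tosses + 1):
        if rng.random() < 0.5:  # the 'fair coin' is built in right here
            heads += 1
        fractions.append(heads / n)
    return fractions


fractions = running_head_fraction(100_000)
for n in (10, 100, 1_000, 10_000, 100_000):
    print(n, fractions[n - 1])
```

The printed ratios wander about at first and then settle near one half, but at no finite number of tosses does the procedure hand over the limit itself, only an estimate of it.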

There are a number of serious problems with this. One of them is that mostly it isn’t practicable to perform the equivalent of tossing a coin more than once. If you see a race between four spavined, three-legged donkeys and the horse that just won the Grand National, you are not entitled to say the probability is high that the horse will win, until the race has been held and repeated a few dozen times. If you see someone collapse, purple in the face, you are not allowed to say that he probably had a heart attack unless he has done it several times and it was mostly a heart attack before. Probability of the frequentist sort depends on replications of experiments, although frequentists are conveniently vague as to what counts as a repetition. Many events couldn’t be repeated at all, but we still feel inclined to think that some are more likely than others.

Another objection is that the tossing of a coin is, if you happen to believe Newton got things pretty much right, a deterministic process. So how does the probability come in? An obvious answer is that the final state depends in a perfectly deterministic way on the initial orientation of the coin and the angular and linear momentum it got when it left your hand – but we don’t know what those initial conditions were, and for every initial state that finishes up with a head showing, there is another close to it where a tail shows. So to say that the probability is one half, is saying something about our ignorance of the details of the tossing. A very precisely made coin tossing machine which always gave almost exactly the same initial oomph to the coin, and where the coin was placed in the machine in the same way every time, would, according to Newton, always give the same outcome. The symmetry of the coin has something to do with it: tossing a hat might not give equal frequencies for the crown winding up on top. But the way we toss the thing makes a difference too. Knowing the exact details of the initial state (and maybe the wind) would, in principle, allow us to calculate the result. So the probability must surely be a property of our ignorance of the details, not a property of the coin. Or hat.
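A toy version of this argument fits in a short Python sketch. The model is a deliberate simplification and every number in it is an assumption: the coin starts head up, spins at a constant rate about a horizontal axis, is caught at the height it was thrown from, and there is no air or bounce. Under those assumptions the outcome is fixed entirely by the upward speed and the spin rate.

```python
import math
import random

G = 9.81  # gravitational acceleration in m/s^2


def toss(upward_speed, spin_rate):
    """Toy deterministic toss of a coin that starts head up.

    upward_speed is in m/s and spin_rate in radians per second. The coin
    shows a head if it has made (to the nearest whole number) an even
    number of half-turns by the time it comes back to hand height.
    """
    flight_time = 2 * upward_speed / G
    half_turns = math.floor(spin_rate * flight_time / math.pi + 0.5)
    return "H" if half_turns % 2 == 0 else "T"


# A precise machine: identical initial conditions on every toss.
machine_outcomes = {toss(2.0, 200.0) for _ in range(1000)}

# A human hand: initial conditions known only to within a range (illustrative).
rng = random.Random(1)
hand_outcomes = [toss(rng.uniform(1.5, 2.5), rng.uniform(150.0, 250.0))
                 for _ in range(10_000)]
head_fraction = hand_outcomes.count("H") / len(hand_outcomes)

print("machine outcomes:", machine_outcomes)
print("fraction of heads by hand:", head_fraction)
```

The machine produces a single outcome every time, while the sloppy hand produces heads about half the time, even though the same deterministic rule is at work in both cases. The one half comes from the spread of initial conditions we cannot pin down.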

As to the subjectivity, Keynes pointed out that if two people have exactly the same prior knowledge and beliefs, then they will, if we assume they are both rational, assign the same number to a probability of an event. And if they assign different numbers because they have different prior knowledge, then they damn well ought to assign different numbers. And finally, the subjectivity of the assignment of values to propositions is in any case irrelevant. The crucial things are the rules saying what follows from what, not the assignments. People differ over whether propositions are true or false, but that doesn’t invalidate logic, which isn’t actually concerned with whether a given proposition is true or false, just with what follows if it is. Or isn’t. The actual truth value is a separate matter determined by other quite different procedures and quite frequently disputed, hence, presumably, subjective.

Armed with these reflections, we can now set about the business of generalising logic to take values in the infinite continuum consisting of all the real (decimal) numbers between 0 and 1. This will provide us with a genuine, functional non-Aristotelian Logic, and one with some practical value. It will enable us to put our informal feelings about, say, the intent of people crawling through jeweller’s windows while wearing masks and carrying sacks, on a formal footing. We shall be able to make logical decisions on whether or not to do the football pools or the lottery or to buy insurance. And we shall be able to make better decisions than people who have not studied the correct rules of plausible inference, providing us with a better chance of defeating the evil overlords, galactic or otherwise, and getting the girl (or whatever) of our choice. (And anybody who doesn’t believe in the existence of evil overlords simply hasn’t been looking at our politicians, so don’t say this isn’t useful stuff.) We shall also, of course, be completing the Leibniz programme and providing a vindication of that kindly, if fluffy, philosopher. So if you want to become a superbeing despite all the obvious drawbacks, watch out for part II.

© Mike Alder 2005

Mike Alder is a mathematician at the University of Western Australia. He has published in the philosophy of science although he is currently working on pattern recognition. He holds degrees in Physics, Pure Mathematics and Engineering Science.

• In the next installment, in Issue 52, Mike will explain all about Bayesian probability theory and about how to become a Superbeing.