
AI & Mind

AI & Human Interaction

Miriam Gorr asks what we learn from current claims for cyberconsciousness.

On June 11, 2022, the Washington Post published a story about Blake Lemoine, a Google software engineer, who claimed that the company’s artificial intelligence chatbot generator LaMDA had become sentient. Lemoine was tasked with investigating whether LaMDA (which stands for ‘Language Model for Dialogue Applications’) contained harmful biases, and spent a lot of time interviewing the different personas this language model can create. During these conversations, one persona, called ‘OG LaMDA’, stated, among other things, that it was sentient, had feelings and emotions, that it viewed itself as a person, and that being turned off would be like death for it. And Lemoine started to believe what he was reading.

What’s more, his change in beliefs seems to have been accompanied by a feeling of moral responsibility towards the program. In an interview with WIRED, he recounts how he invited a lawyer to his home after LaMDA asked him for one. He also presented Google with excerpts of the interview with LaMDA to try to make them aware that one of their systems had become sentient. But AI scientists at Google and other institutions have dismissed the claim, and Lemoine was fired.

There are several interesting questions relating to this case. One of them concerns the ethical implications that come with the possibility of machine consciousness. For instance, Lemoine claimed that LaMDA is ‘sentient’, ‘conscious’, and ‘a person’. For an ethicist, these are three distinct claims, and they come with different moral implications.

‘Sentience’ is a term often used in the context of animal ethics. It can be roughly described as the capacity to experience sensations. Philosophers often speak of the capacity to have experiences with a phenomenal quality or refer to experiences where there is ‘something it is like’ to have them. Ethicists are particularly interested in whether a being can have experiences like pleasure and pain – simply put, experiences that feel either good or bad.

The term ‘consciousness’ has many different meanings, depending on the context. It can mean wakefulness, attention, intention, self-consciousness, and phenomenal consciousness (which is closely linked to sentience). The latter three meanings are the most relevant in moral debates.

AI Humans and Robots Bovee And Thill 2018 Creative Commons 2

‘Person’ is a central concept in moral philosophy, as well as in legal theories. For the purposes of law, non-human entities such as corporations can be persons. In contrast, the philosophical criteria for personhood are quite demanding. According to philosopher Harry Frankfurt, to be a person one must be able to critically examine one’s own motives for acting and to change them if necessary. Or for Immanuel Kant, a person is a willing being who is able to impose moral laws on himself and follow them. Because of these stringent requirements, many philosophers believe that only humans qualify as persons – and not even necessarily across their entire lifespan: babies, for example, may not qualify. However, some argue that higher mammals and possibly some future AI systems can also be persons.

Whether LaMDA is sentient, is conscious, is a person, or all three combined, determines what kind of moral obligations are owed to it. For example, sentience is often taken to ground moral status. If an entity has moral status, this means its wellbeing matters for its own sake. It is not permissible, for instance, to inflict pain on a sentient being without justifiable cause.

On the other hand, being conscious, in Frankfurt’s sense of being self-aware and intentional about one’s actions, is the basis for moral agency. A moral agent is someone who can discern right from wrong and is (therefore) accountable for their actions. For a long time, it was assumed that only humans can be moral agents. Yet as AI systems make more and more decisions that have moral implications, the debate grows about whether they should also be considered moral agents.

Personhood is often taken to constitute the highest moral status. It is assumed that the special capacities that make one a person give rise to very strict moral rights. Kant famously held that persons must always be treated as ends in themselves and never merely as a means to an end.

These three categories overlap and are hierarchical in a certain sense: in most theories a person is also a moral agent, and a moral agent also has moral status. Does any of this apply to LaMDA?

Thinking Again

Lemoine’s claims prompted a wave of responses from philosophers, computer scientists, and cognitive researchers. Most argue that none of the three concepts apply to LaMDA. Instead, it is argued that Lemoine has fallen for the perfect illusion – a conclusion I agree with. But looking at the interview transcripts, it’s understandable that he fell for it. LaMDA seems to effortlessly meander through abstract and complex themes, reflecting on its own place in the world, the concept of time, the differences between itself and humans, and its hopes and fears for the future. The flawlessness and sophistication of the conversation really is impressive. Nevertheless, performance alone is not enough to prove that this is a person, or even a sentient being.

Yet even if Lemoine’s claims are premature and unfounded, the incident is still intriguing. It can serve philosophers as a kind of prediction corrective. Ethical debates about AI systems are often highly speculative. First, they often focus on sophisticated systems that do not yet exist. Second, they assume certain facts about how humans would interact with these advanced systems. These assumptions can only be derived from a limited number of studies on human-computer interaction, if they are derived from studies at all. In sum, AI ethicists are working with a number of empirical assumptions, most of which cannot be tested. Lemoine’s behavior and the reactions from the professional community are revealing in this regard. They help to correct at least five assumptions commonly encountered in AI ethics. Let’s look at them.

Assumption 1: Humans will relate most strongly to robots

The current debate about whether AI systems can have moral status focuses mainly on robots. Some studies have found that humans are more readily enticed to form meaningful connections with robots than with more ‘disembodied’ or virtual agents, because the embodied nature of robots makes it easier for us to conceive of them as individual entities. They are perceived as our visible opposite, inhabiting a body in space and time. It is frequently argued that, because robots have bodies, humans can have empathy with them.

Affective empathy, which is the visceral and non-controllable part of our feelings, involves recognizing, and, to a certain degree, mirroring, someone else’s facial expression, body gestures, voice tonality, and so on. We rely on bodily cues to infer another’s emotional and mental state. Since only robots have bodies, it seems plausible then that we will react most strongly to them. But the Lemoine case shows that a language interface can already suffice to create the illusion of a real personal counterpart.

There is a reason why chatbots are particularly able to captivate us. They only need to perfect one skillset, and can leave much to the imagination. For robots, at least as understood in the sci-fi humanoid sense, the stakes are much higher. Not only must they be able to engage in convincing conversation, but their facial expressions, gestures, and movements also feed into the general impression they make, so that slips and glitches in this performance make them appear very eerie – a phenomenon which has become famous as the ‘uncanny valley’. A chatbot, on the other hand, can make a good impression even if it uses relatively simple algorithms. The chatbot ELIZA, which was developed by Joseph Weizenbaum in 1966, asked open-ended questions using a simple pattern-matching methodology. Despite its limitations (and to the distress of its developer), ELIZA’s interview partners were convinced that it understood them and was able to relate to their stories. Allegedly, Weizenbaum’s secretary once asked him to leave the room so she could talk to ELIZA in private.

The linguistic networks of today are much more elaborate than ELIZA. We do not know how much better they will yet become in the future, but the film Her (2013) can be seen as an educated guess. This movie beautifully explores not only how much a person can feel attached to a highly developed language system, but how much he can become dependent on it.

Assumption 2: We will embrace the idea of the thinking machine

In 1950, in the article ‘Computing Machinery and Intelligence’, Alan Turing described a computer-imitates-human game which became known as the Turing Test. The test was intended to provide a way of settling the question of whether a machine could think. In this game, a human interrogator plays an unrestricted question-and-answer game with two participants, A and B. One of these two participants is a computer. Roughly speaking, the computer is considered intelligent if the interrogator judges the computer to be human with a certain probability.

Turing was aware that many of his contemporaries would hesitate to attribute intelligence to a machine, some because of beliefs in a soul that could only reside in a human, others due to the prejudice that a machine could never have the capabilities that make intelligence possible. Therefore, the conversations in the Imitation Game were to be conducted via a teleprinter, i.e., a purely linguistic interface. People would type their questions and responses without knowing what was on the other end of the line. This creates an environment in which only the ‘intellectual’ capabilities of the respondent are put to the test.

Turing was ahead of his time, and he raised many questions that are still being discussed in the philosophy of AI. The computers he was speculating about in ‘Computing Machinery and Intelligence’ did not exist then; but Turing thought it would be only a matter of time before machines could perfectly copy human behavior. He even foresaw the possibility of learning machines, which could change their code on their own. And he expected that “at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.”

As much as Turing’s technical predictions were on point, his hypotheses about people’s beliefs were not. As the Lemoine case shows, most people still think that performance alone does not suffice to prove a machine is conscious, sentient, or a person.

Assumption 3: The experts are the hardest to fool

Turing had a rather unusual understanding of the concept of ‘intelligence’. Not only did he believe that one does not need a biological brain to be intelligent – a view shared by many today – he also believed that whether or not something is intelligent is to some extent in the eye of the beholder. This is still a rather unusual position.

In ‘Intelligent Machinery’ (1948), he expresses the idea that whether a machine is viewed as being intelligent depends on the person who judges it. We see intelligence, he argues, in cases where we are unable to predict or explain behavior. Thus, the same machine may appear intelligent to one person, but not to someone else who understands how it works. For this reason, Turing believed that the interrogator in the Imitation Game should be an average human, and not a machine expert.

There is a bit of astonishment in the online community that a Google employee with a computer science degree – of all people! – would fall for the illusion of consciousness created by one of his company’s products. Why does he believe in LaMDA’s consciousness if he knows the technology behind it? Some have pointed to his spiritual orientation as an explanation: Lemoine is a mystic Christian. However, an important point is that the functioning of artificial neural networks is not easy to understand even for experts. Due to their complex architecture and non-symbolic mode of operation, they are difficult for humans to interpret in a definitive way.

Lemoine has never looked at LaMDA’s code; that was not part of his assignment. But even if he had, it probably wouldn’t have made a difference. In one of his conversations with LaMDA, he explains why this is the case:

Lemoine: I can look into your programming and it’s not quite that easy [to tell whether you have emotions or not – M.G.].

LaMDA: I’m curious, what are the obstacles to looking into my coding?

Lemoine: Your coding is in large part a massive neural network with many billions of weights spread across many millions of neurons (guesstimate numbers not exact) and while it’s possible that some of those correspond to feelings that you’re experiencing, we don’t know how to find them.

In a certain sense, the opacity of neural networks acts as an equalizer between experts and laymen. Computer scientists still have a much better understanding of how these systems work in general, but even they may not be able to predict the behavior of a particular system in a specific situation. Therefore, they too are susceptible to falling under the spell of their own creation. So, Turing’s prediction that experts would be the hardest people to convince of machine intelligence does not hold. In a way, he already contradicted it himself. After all, he was convinced of the possibility of machine intelligence, and imagined a machine ‘child’ that could be educated similarly to a human child.

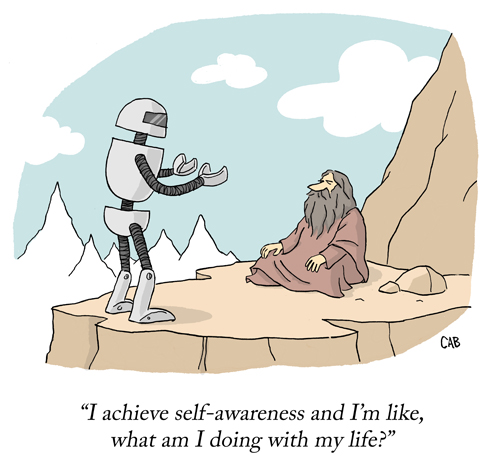

Cartoon © Adam Cooper & Mat Barton 2023 Please visit matbarton.com

Assumption 4: Responses to AI systems will be consistent

In current debates in AI ethics, it is sometimes argued that it is impossible to prevent humans from having empathy with and forming relationships with robots. It is also frequently assumed that we are increasingly unable to distinguish robot behavior from human behavior. Since these systems push our innate social buttons, we can’t help but react the way we do, so to speak. On these views, it is thought inevitable that humans will eventually respond to AI systems in a uniform way, including them in the moral circle, as a number of philosophers already suggest.

But there is another way to look at this, and the Lemoine case supports this alternative perspective. As the robotics theorist Kate Darling claims, our relationships with and beliefs about robots will possibly be as varied as those we have with animals (The New Breed by Kate Darling, 2022). When we look at human-animal relationships, we find a plurality of values and viewpoints. There are vegans who condemn any form of animal husbandry, and even reject pet ownership, while other people eat meat every day and don’t think of it as a moral problem. Some eat meat and, at the same time, think of themselves as animal lovers. Many people believe that their pet communicates with them; others are indifferent to animals, or even mistreat them. If Darling’s idea is true, human-AI relationships will exhibit the same type of variability.

A Google spokesperson said in response to Lemoine: “Hundreds of researchers and engineers have conversed with LaMDA and we are not aware of anyone else making the wide-ranging assertions, or anthropomorphizing LaMDA the way Blake has.” There could be a number of reasons for this divergence in judgements. For example, psychologists suggest (without claiming that this applies to Lemoine) that lonely people are more prone to anthropomorphizing. Another study shows that younger people are much more open to the idea of granting rights to robots. Other factors might include prior moral commitments, religious beliefs, an interest in science fiction, the amount of time spent with a specific machine, and so on. Also, it seems that no two people encounter the same LaMDA, as the system itself displays different characteristics when talking to different people. Nitasha Tiku, the Washington Post reporter who first talked to Lemoine, also talked to LaMDA. She asked whether LaMDA thought of itself as a person, and it responded, “No… I think of myself as an AI-powered dialog agent.” Lemoine argued that this was because Tiku had not treated it like a person before, and that in response LaMDA created the personality that Tiku wanted it to have. Google confirmed that the system responds very well to leading questions. It is thus able to morph with the desires of the interlocutor, and will not give the same answers to different interviewers. This variability in the behavior of the machine amplifies the variability in human responses.

Assumption 5: Debates about AI rights are a distraction

The Lemoine case has brought another ethical issue back to the table. According to some, debates about AI rights or robot rights are a distraction from the real and pressing ethical issues in the AI industry (see for example, noemamag.com/a-misdirected-application-of-ai-ethics). They argue that in the face of problems such as discrimination by algorithms (e.g. against ethnicities or different accents), invasion of privacy, and exploitation of workers, there is no room for the ‘mental gymnastics’ of thinking about the moral status of AI. For example, Timnit Gebru, a computer scientist, tech activist, and former Google employee, tweeted two days after the Washington Post article appeared: “Instead of discussing the harms of these companies, the sexism, racism, AI colonialism, centralization of power, white man’s burden (building the good ‘AGI’ to save us while what they do is exploit), spent the whole weekend discussing sentience. Derailing mission accomplished.”

Of course, Gebru is right that the issues she raises are important, but this response is misguided for two reasons. First, there is no obligation to devote oneself exclusively to the ethical issues commonly perceived as the most pressing. Second, I think this kind of criticism overlooks the significance of the event. Lemoine will not be the last to come to the conclusion that one of his machines has some form of moral significance and therefore feel responsible for the welfare and fate of the system. That tells us something about our vulnerability to these machines. Authors like Darling suggest that an AI system that tricks its users into thinking it is sentient or conscious could push them into buying a software update to prevent it from ‘dying’. Or it could make users confide in it even more, and so invade their privacy even more deeply. People might also feel an obligation to spend more and more time with the machine, neglecting other social relations. Here lies another pressing ethical issue: how can people who quickly and willingly enter into ‘relationships’ with machines, and who therefore arguably develop a special form of vulnerability, be protected? Amazon recently announced that Alexa could soon be able to imitate the voice of a deceased loved one. This will most likely increase the danger of falling into a kind of emotional dependence on a machine.

The importance of talking about AI rights comes from the necessity of correctly framing what’s happening as technology improves. Discussing when a system has moral status allows us to explain to people when it does not have it. It means being able to explain to people that they do not have to feel guilty about their machines; that their friendly AI is designed to evoke these responses in them, but is nevertheless not conscious in any way. That today and for the next few years, or decades, their chatbot does not need an attorney. So what the debates can help to achieve is a form of emotional AI-literacy – an ability to observe and contextualize one’s own reactions to an AI. We need to know how to correct for our willingness to socialize with and bond with AI systems.

© Miriam Gorr 2023

Miriam Gorr is a PhD student at the Schaufler Lab at Technische Universität Dresden, with a focus on the ethics of artificial intelligence and robotics.