AI & Mind

Can Machines Be Conscious?

Sebastian Sunday Grève and Yu Xiaoyue find an unexpected way in which the answer is ‘yes’.

Alan Turing thought that it was possible (at least in theory) to make machines that enjoyed strawberries and cream, that British summer favourite. From this we can infer that he also thought it was possible (again, at least in theory) to make machines that were conscious. For you cannot really enjoy strawberries and cream if you are not conscious – or can you? In any case, Turing was very explicit that he thought machines could be conscious. He did not, however, think it likely that such machines would be made any time soon. This was not because he considered the task particularly difficult, but because he did not think it worth the effort: “Possibly a machine might be made to enjoy this delicious dish, but any attempt to make one do so would be idiotic,” he wrote in his influential ‘Computing Machinery and Intelligence’. He added that even mentioning this likely inability to enjoy strawberries and cream may have struck his readers as frivolous, and explained:

“What is important about this disability is that it contributes to some of the other disabilities, e.g. to the difficulty of the same kind of friendliness occurring between man and machine as between white man and white man, or between black man and black man,”

thus reminding us, as he was wont to do, that humans have always found it difficult to accept some other individuals, even within their own species, as being of equal ability or worth. In other words, Turing locates the importance of machines’ likely inability to enjoy strawberries and cream in its being one example of a broader inability on the part of machines to share certain elements of human life.

He got that right, at least in principle – just as he accurately predicted the success of artificial neural networks, machine learning in general, and reinforcement learning in particular. However, Turing was wrong to predict – as he explicitly and repeatedly did – that no great effort would be put into making machines with distinctively human but non-intellectual capabilities. On the contrary, the growing demand for chatbots, virtual assistants, and domestic robots clearly shows the opposite to be true. If machines can be made to be conscious, we will probably engineer conscious machines sooner or later. Furthermore, since most people think that consciousness makes a big difference, not least from an ethical perspective, the question of whether machines can be conscious appears important enough that more people should learn to ask it.

The first thing to do in answering the question is to specify what we mean by ‘machine’. When Turing considered whether machines can think, he restricted ‘machines’ to mean digital computers, the same type of machine as the vast majority of our modern-day computing devices, from smartphones to supercomputers. At the time he was writing, around 1950, he had just helped to make such a machine a reality. Incidentally, he had also provided the requisite mathematical groundwork for computers, in the form of what is now known as the Universal Turing Machine. Given how novel computers were at the time, Turing still had a good deal of explaining to do. Today, most people are at least intuitively familiar with the basic powers of computing machinery, so we can spare ourselves a detailed theoretical account. In fact, we need not restrict what we mean by ‘machine’ to digital computers. As will be seen, the particular way of asking whether machines can be conscious that we present here only requires us to stipulate that the relevant engineering is not primarily of a biological nature.

By far the trickier part of asking whether machines can be conscious is to determine what one should take the word ‘conscious’ to mean. To be sure, humans are intimately familiar with consciousness, insofar as an individual’s consciousness just is their subjective experience. On this common meaning of the term, consciousness is that special quality of what it is like to be in a particular mental state at a particular time. It is this same special quality that many people are inclined to think must be missing in even the most sophisticated robots.

But the main difficulty in asking ‘Can machines be conscious?’ is that, despite our natural familiarity with consciousness, we are still ignorant of its fundamental nature. There is no widely agreed-upon theory of what consciousness is, or of how we can tell when it is present. We certainly do not know how to build it from the ground up. The trick, as we shall see, is to circumvent this ignorance and make use of our basic familiarity instead.

Man Made

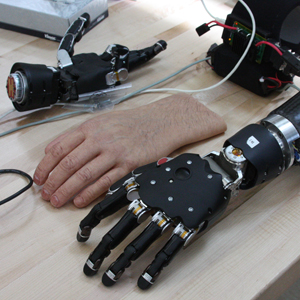

Prosthetic limb (US Navy, 2012, public domain)

Can we think of any promising way to engineer a conscious thing? Of course, there is procreation; but this would fall foul of our condition that the method of engineering not be of a primarily biological nature. The same holds for regenerative methods such as neural stem cell therapy and tissue nano-transfection, which turns skin cells into functional nerve cells. Both procreation and regeneration can be used to engineer conscious things, in particular humans, either directly or indirectly (by restoring relevant functions). However, due to the primarily biological nature of the methods employed, we will naturally consider the outcome to be not conscious machines, but humans.

Consider now a similar but different method. Recent advances in biological engineering have achieved functional restoration of part of the human nervous system, in the form of prosthetic limbs that are connected with the brain in both directions, thus enabling fine motor control and proprioception (intuitive knowledge of limb position), as well as reduced phantom limb perception. The technology is still in its early stages, but it already provides an empirical proof of concept that parts of the human nervous system can be restored using semiconductor materials such as silicon. It seems reasonable to expect that this and related ongoing research on the human–machine neural interface will yield further advances, so that more parts of the nervous system can be restored or replaced, again using materials such as silicon. In the future, a quadruple amputee might be able to regain full arm and leg functionality, including haptic perception, proprioception, thermoception, and so forth.

Now suppose the following three things (which should seem fairly commonsensical) are true. First, the human nervous system, including the brain and the spinal cord, is constitutive of human consciousness. This means that for anything happening in the human mind there is nervous system activity underpinning it. Second, an individual’s conscious states normally include limb-based sensory experience, because the nervous system extends into the limbs. Third, some amputees’ conscious states include prosthetic limb-based sensory experience, because relevant parts of the nervous system have been artificially restored, as in the above example. It follows that such a prosthetic limb is itself partly constitutive of the individual’s consciousness, because their nervous system extends into their prosthetic limb. Since the relevant method of engineering a prosthetic limb is not of a primarily biological nature, we can conclude that a machine is partly constitutive of their consciousness. It does not follow, however, that machines can be conscious. For it is of course possible that some human part is ultimately necessary. This may seem particularly plausible given that the integration of machine parts into the nervous system considered thus far affects only the peripheral nervous system, not the central nervous system, i.e. not the spinal cord or brain.

But it is not obvious what relevant difference it makes whether central or peripheral parts are replaced. To be sure, there are many important differences between the central and peripheral systems; a foot is not a brain. Yet at a certain level of abstraction, central and peripheral parts of the nervous system are indeed the same kind of thing (namely, nerve activity), and so the theoretical possibility of replacing any given part of the nervous system becomes difficult to deny.

To reiterate, following recent advances in biological engineering, it seems reasonable to expect that future research on the human–machine neural interface, as well as on physical neural networks, memory resistors, and memristive systems, for example, will enable the restoration or replacement of more and more parts of the human nervous system using materials such as silicon.

Now imagine the following scenario:

A hundred years from now, after a century of steady technological progress, Thesea, while still young, begins to suffer from a degenerative disease of the nervous system. Thesea is lucky, though, insofar as implant surgery is available to her whenever she needs it. She is lucky too that the intervals between surgeries are long enough that new parts of her nervous system can always be properly integrated – thanks to various kinds of therapy, as well as her system’s continued neuroplasticity – before another part needs to be replaced.

How much of Thesea’s nervous system would need to be replaced by implants in order for her to be considered a machine rather than a human? Different people will, inevitably, give different answers. Some may consider it necessary that all of Thesea’s nervous system, including her brain and spinal cord, or even her whole body, be replaced before we call her a machine. Wherever one draws the line, the transformative principle remains the same, so that anyone who accepts recent advances in prosthetics as proof that parts of the human nervous system can be restored using a material such as silicon should, on this basis, be able to agree that Thesea could eventually become a machine. Moreover, they should be able to accept that Thesea would then be a machine that is conscious just as humans are (or, at any rate, just as Thesea used to be).

Some will no doubt want to object to the supposed psychological continuity throughout Thesea’s gradual transformation. This kind of objection may take aim either at the supposed continuity of Thesea’s consciousness or, less directly, at the continuity of her personal identity (since a lack of personal identity would also cast doubt on the supposed persistence of consciousness). To substantiate their qualms, such an objector would have to explain where, in their opinion, things would likely go wrong. Presumably they believe that at some point the process of transformation would become significantly more involved, perhaps to the extent that it would ultimately prove impossible to replace a biological brain entirely with a synthetic one.

It would of course be of great scientific interest to discover any such point of ‘no further progress’ along the proposed transplant trajectory. But until we get there, one will at least be reasonably justified in believing that, given enough time, and taking the smallest steps possible, the human nervous system can be replaced by parts of a different material, such that a human may be slowly turned into a machine whilst still retaining consciousness.

© Sebastian Sunday Grève and Yu Xiaoyue 2023

Sebastian Sunday Grève (Chinese Institute of Foreign Philosophy and Peking University) and Yu Xiaoyue (Peking University) are philosophers based in Beijing, where they are working on the cognitive foundations of human-machine relations, in close collaboration with colleagues from other disciplines.