Tallis in Wonderland

The Fantasy of Conscious Machines

Raymond Tallis says talk of ‘artificial intelligence’ is neither intelligent nor, indeed, intelligible.

Being bald means that you can’t tear your hair out in lumps. Consequently, I have to find other ways of expressing exasperation. One such is through a column inflicted on the readers of Philosophy Now (who may justly feel they deserve better). And the trigger? Yet another wild and philosophically ill-informed claim from the artificial intelligentsia that conscious machines are, or soon will be, among us.

An engineer at Google recently attracted international attention by claiming that the company’s chatbot development system – Language Model for Dialogue Applications (LaMDA) – had shown the signs of sentience by its seemingly thoughtful and self-reflexive answer to being questioned as to what it was afraid of. It was, it confessed, afraid of being turned off – in short, of its own death.

It ought to be obvious that LaMDA was not aware of what it was ‘saying’, or its significance. Its answer was an automated output, generated by processing linguistic probabilities using the algorithms in its software. Its existential report was evidence then, not of its awakening into a sentient being, but of its unconscious aping of sentience for the benefit of an actually sentient being – in this case the engineer at Google. So why the hoo-ha? It’s rooted in longstanding problems with the way we talk about computers and minds, and the huge overlap in the vocabulary we use to describe them.

Mind Your Language

Ascribing mentality to computers is the obverse of a regrettable tendency to computerize human (and other) minds. Computational theories of minds are less popular than they were in the latter half of the last century, so it’s no longer taken for granted that manifestations of consciousness are to be simply understood as a result of computational activity in the wetware of the brain. However, there remains a tendency to look at computers and so-called ‘artificial intelligence’ through the lens of mentalising, even personifying, discourse. Their nature as semi-autonomous tools, in which many steps in the processes they facilitate are hidden, seems to license the use of language ascribing a kind of agency and even a sense of purpose to them. But when we describe what computers ‘do’, we should use inverted commas more liberally.

The trouble begins at the most basic level. We say that pocket calculators do calculations. Of course, they don’t. When they enable us to tot up the takings for the day, they have no idea what numbers are; even less do they grasp the idea of ‘takings’, or what the significance of a mistake might be. It is only when the device is employed by human beings that the electronic activity that happens in it counts as a calculation, or the right answer, or indeed, any answer. What is on the screen will not become a right or wrong answer until it is understood as such by a conscious human who has an interest in the result being correct.

Reminding ourselves earlier of the need for inverted commas in our descriptions of computer activities might have also prevented misunderstandings around some of the more spectacular recent breakthroughs in computing. It is often said that computers can now ‘beat’ Garry Kasparov at chess (Deep Blue), Lee Sedol, the world champion at Go (AlphaGo), and the greatest performers at the quiz game ‘Jeopardy!’ (Watson). This is importantly inaccurate. Deep Blue did not beat Garry Kasparov. The victors were the engineers who designed the software. The device had not the slightest idea of what a chessboard was, even less of the significance of the game. It had no sense of being in the location where the tournament was taking place, and had nothing within it corresponding to knowledge of the difference between victory and defeat.

We could easily summarize the way in which pocket calculators and the vastly more complex Deep Blue are equally deficient: they have no agency as they are worldless. Because they lack the complex, connected, multidimensional world of experience in which actions make sense, and hence count as actions, it is wrong to say that they ‘do’ things. And increasing the power, the versatility, and the so-called ‘memory’ of computers, or the deployment of alternative architectures such as massively parallel processing, does not bring them any closer to understanding the nature and significance of what they are ‘doing’. My smartphone contains more computing power than the sum of that which was available worldwide when I went to medical school, and yet it is you and I, not our phones, who make the call – who exchange information.

This situation will not be altered by uniting the processes in Deep Blue with any amount of ‘artificial reality’. A meta-world of electronically coded replicas of the world of the chess player would fall short of an actual world in many fundamental ways, even if it were filled out in precise, multi-dimensional detail. Merely replicating features of a world won’t make that world present to that which replicates it, any more than the mirror image of a cloud in a puddle makes the cloud present to the puddle. Replication does not secure the transition from what-is to that it is or the fact that it is in or for a perceiving mind (but that is a huge story for another time!).

Criteria for Consciousness

I have already indicated why we think of computers as agents, or proxy agents, when we do not consider other tools in this way. Many of the steps that link input with output, or connect our initial engagement with the machine with the result we seek from it, are hidden. Because we can leave the device to ‘get on with it’ when it enables us to perform things we could not do without its assistance, it seems to have autonomy. This is particularly striking in devices such as AlphaGo, which are programmed to modify their input-output relations in light of external ‘feedback’, so that they can ‘train’ themselves to improve their ‘performance’. Such self-directed ‘learning’ is, however, nonconscious: the device has no idea what it is learning. It has no ideas, period. Nor, to digress for a moment, do these devices remember what they have learnt in the sense that matters to humans. Truly to remember something is to be aware of it, and aware of it as being past, and in some important cases as belonging to my past. It is courtesy of such memories that I relate to myself as a person with a past, rather than simply being a present entity shaped by prior events. It means that I at time t2 reach back to some experiencer that I remember myself being at time t1. This is relevant when deciding whether a LaMDA chatbot should be regarded as the kind of self-reflexive being suggested by its ‘answer’ to questions about its fears. Feedback loops in circuitry do not deliver that kind of awareness.

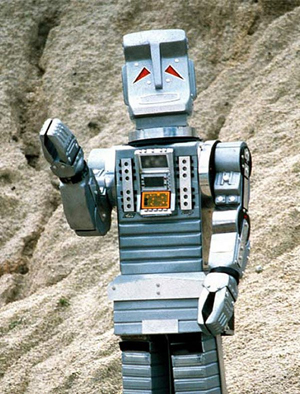

“Your plastic pal who’s fun to be with.”

Marvin The Paranoid Android, from The Hitchhiker’s Guide To The Galaxy © BBC Television 1981

Many will accept all this, but still find it difficult to resist thinking of advanced computational networks as intelligent in the sort of way that humans are intelligent. ‘Artificial intelligence’ in fact usefully refers to the property of machines whose input-output relations assist their human users to perform actions that require the deployment of intelligence. It is, however, misleading if the transfer of the epithet ‘intelligent’ from humans to machines is taken literally, for there can be no real intelligence without consciousness.

That should not need saying, but it is widely challenged. The challenge goes all the way back to a conceptual muddle at the heart of Alan Turing’s iconic paper, ‘Computing Machinery and Intelligence’ (1950). There Turing argued that if a hidden machine’s ‘answers’ to questions persuaded a human observer that it was a human being, then it was genuinely thinking. If it talked like a human, it must be a human.

The most obvious problem with this hugely influential paper is that its criterion for what counts as ‘thought’ in a machine depends on the judgement, indeed the gullibility, of an observer. A naïve subject might ascribe thought to Alexa, whose smart answers to questions are staggering. Her – sorry! its – ‘modest’ willingness to point us in the direction of relevant webpages when it runs out of answers, makes its ‘intelligence’ even more plausible.

But there is a more fundamental problem with the Turing test. It embraces a functionalist or behaviourist definition of thought: a device is thinking if it looks to an observer as if it were thinking. This is not good enough. In the absence of any reference to first-person consciousness, the Turing test cannot provide good criteria for a machine to qualify as thinking, and so for genuine intelligence to be present in artificial intelligence machines. There is neither thinking, nor other aspects of intelligence, without reference to an experienced world or experienced meaning. And none of this is possible without sentience, which cannot be reduced to observable behaviour, but is a subjective experience. The Turing test, in short, does not help us to determine whether the machine is sentient, even less whether it is aware of itself or of the individuals engaging with it.

Computing the Future

It may be the case that, notwithstanding the anthropomorphic language in which we talk about electronic devices, inside or outside the world of AI engineering few people really believe that there are sentient computers – machines aware of what they’re up to while they are prosthetically supporting human agency, and conscious of themselves as agents. There are some, however, who think it’s only a matter of time. Thomas Metzinger is so concerned with this possibility (though he is careful to state that it is only a possibility and he does not suggest a timeframe) that he thinks we should impose a ban on the development of all ‘post-biotic’ sentient beings. We know from nature that consciousness is often associated with appalling suffering, and so it is a fundamental moral imperative that we should not risk this happening artificially.

But why should we think that it’s even possible? What advances in information technology would result in calculators that actually do calculations, know what they are for, take satisfaction in getting them right, and feel ashamed when they get them wrong? What increases in the power, and what modifications in the architecture, of computers would instill intentionality into their circuitry and make what happens there be about a world in which they are explicitly located, with some sense of ‘what it is like’ to be that computer? Our inability to answer this is the flip side of our bafflement as to how activity in our own neural circuitry creates a subject in a world that, courtesy of the body with which it is identified, it embraces as its own world. We haven’t the faintest idea what features of brains account for consciousness. Remembering this should cure us of two connected habits of thought: of, on the one hand, computerising minds, and, on the other, of mentalising computers. Meanwhile, we should be less modest, and refrain from ascribing to machines the intelligence we deployed in creating them.

Good to get that off my chest. I feel calmer now. Thank you for listening.

© Prof. Raymond Tallis 2022

Raymond Tallis’s latest book, Freedom: An Impossible Reality is out now.